Data Engineering

Data engineering is the practice of building, maintaining, and scaling the infrastructure and systems that support the collection, storage, and processing of data. It is an important aspect of DevOps as it allows organizations to gather and use data to make informed decisions and improve the performance and reliability of their systems.

Courses:

Apache Kafka Tutorial for Beginners | Edureka

What is kafka

A Cloud Guru Data engineering

Certification

- Learn: the basics of data engineering: The first step is to understand the basic concepts of data engineering, such as data warehousing, data modeling, and data pipelines.

- Learn: how to use data warehousing tools: Learn how to use data warehousing tools such as Amazon Redshift, Google BigQuery and Microsoft Azure Synapse Analytics to store and manage large amounts of data.

- Learn: how to use data modeling tools: Learn how to use data modeling tools such as ERwin and ER/Studio to model and organize data.

- Learn: how to use data pipeline tools: Learn how to use data pipeline tools such as Apache NiFi, Apache Kafka and Apache Airflow to collect, transform and move data between different systems.

- Learn: how to use data visualization tools: Learn how to use data visualization tools such as Tableau and Power BI to create interactive dashboards and visualizations of your data.

- Learn: how to use data governance tools: Learn how to use data governance tools such as Collibra and Informatica to manage data lineage, data quality and data cataloging.

- Learn: how to use data security tools: Learn how to use data security tools such as Apache Ranger, Apache Atlas and Amazon Glue Data Catalog to protect your data and comply with regulatory requirements.

- Learn: how to use cloud-native data engineering services: Learn how to use data engineering services provided by cloud providers such as AWS Glue, Azure Data Factory, and Google Cloud Dataflow to build and manage data pipelines.

Week 1

Watch the initial general overview video on Kafka. (Link 1 in Courses)

Go through the Kafka Blog on Confluent (Link 2 in Courses)

- Perform the following exercise on local machine

- Download Kafka

- Setup Kafka Cluster

- Setup a Topic

- Produce/Consume any simple message using local producer and consumer scripts provided by kafka

- View the following blog and do some R&D on properties you see in Kafka config fig

Week 2

- Start watching the ACloudGuru Course on Kafka (Link 3 in Courses)

- Deploy a multi-node cluster. This blog sets up a 3 node cluster but on a single machine. We have to do it on three machines. Still give it a read to understand how inter cluster communication works.

- Use Emumba Learning Account on AWS

- Deploy 3 nodes

- Use t2.small or t2.medium instances

- Use any VPC already created

- Tag your resources with the following tags

- Purpose: For Devops Curriculum

- Created By: (Your Name)

- Shut down instances when not in use

- Create a topic on the cluster with 3 partitions.

- Develop a producer & consumer script using Python.

- Use confluent kafka python library (Link)

- Produce & consume messages on kafka topic using your custom scripts.

Week 3

- Understand Kafka Networking

- Configure Multiple Listeners

- Understand how listeners can be used to enable private and public communication with Kafka Brokers

Week 4

- Monitoring

Final Exercise

Please use the Emumba Learning AWS Account. Ensure that you turn off the machines when not working.

Deploy a 3 node Kafka Cluster with the following default properties

- Hardware: t2.medium

- OS: Ubuntu

- Disk: 30 GB

- Partitions: 15 partitions

- Replication Factor 3

- Store Kafka logs in ‘/kafka-data’ directory

- Retain Kafka Messages for 3 days

Create a Kafka topic called ‘topic1’ with the following specs:

- Partitions: 30 partitions

- Replication Factor: 2

Write a custom Kafka Producer script which does the following

Use any programming language. (Python preferred)

Generate continuous random messages (Sample below)

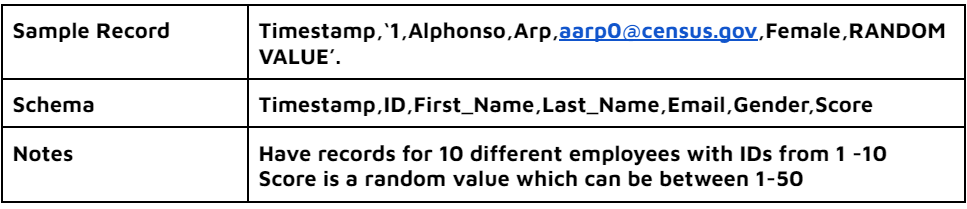

Sample- record

Produce the messages to the Kafka Topic. The data should be partitioned on the basis of ID. What advantages will this give us?

Export metrics for the Kafka Producer (Within script)

- Print number of messages produced per second

- Print total number of messages on topic

Write a custom Kafka Consumer script which does the following

- Use any programming language. (Python preferred)

- Consume the messages

- Write all messages for User ID 1 to a file called output.csv

- Export metrics for the Kafka Consumer (Within script)

- Print number of messages consumed per second

Use a simple Druid deployment

Connect Druid with Kafka Cluster

- Deploy Imply Private on K8s

- Search Auto-scaling middle managers (FOR IMPLY)

- Try configuring Druid Task Autoscaler

Consume messages from the Kafka cluster (topic1)

Aggregate the incoming data to get the total score by each employee in the last 5 mins.